Introduction to Amazon MWAA

Amazon Managed Workflows for Apache Airflow (MWAA) is a managed orchestration service for Apache Airflow. It makes it easier to set up and operate end-to-end data pipelines in the cloud at scale.

Apache Airflow is an open-source tool used to programmatically author, schedule, and monitor sequences of processes and tasks referred to as “workflows.” With Amazon MWAA, you can use Airflow and Python to create workflows without having to manage the underlying infrastructure for scalability, availability, and security.

Amazon MWAA automatically scales its workflow execution capacity to meet your needs, and is integrated with AWS security services to help provide you with fast and secure access to your data.

Prerequisites

To create an Amazon MWAA environment, you may want to take additional steps to ensure you have permission to the AWS resources you need to create.

- AWS account – An AWS account with permission to use Amazon MWAA and the AWS services and resources used by your environment.

- Amazon VPC – The Amazon VPC networking components are required by an Amazon MWAA environment.

- Amazon S3 bucket – An Amazon S3 bucket to store your DAGs and associated files, such as plugins.zip and requirements.txt. Your Amazon S3 bucket must be configured to block all public access, with Bucket Versioning enabled.

- AWS KMS key – An AWS KMS key for data encryption in your environment. You can choose the default option on the Amazon MWAA console to use an AWS owned key when you create an environment.

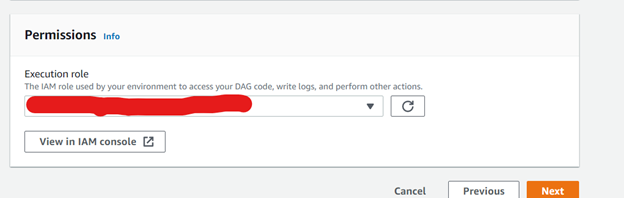

- Execution role – An execution role that allows Amazon MWAA to access AWS resources in your environment. You can choose the default option on the Amazon MWAA console to create an execution role when you create an environment.

- VPC security group – A VPC security group that allows Amazon MWAA to access other AWS resources in your VPC network.
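The S3 bucket prerequisite above (block all public access, Bucket Versioning enabled) can also be applied from code. The following is a minimal sketch, assuming boto3 is installed and AWS credentials are configured; the function names are illustrative and the bucket name is whatever you pass in.

```python
def public_access_block_config():
    """Settings that block all public access to the bucket."""
    return {
        "BlockPublicAcls": True,
        "IgnorePublicAcls": True,
        "BlockPublicPolicy": True,
        "RestrictPublicBuckets": True,
    }


def versioning_config():
    """Settings that turn on Bucket Versioning."""
    return {"Status": "Enabled"}


def prepare_mwaa_bucket(bucket_name):
    """Apply both settings to an existing bucket (needs boto3 and credentials)."""
    import boto3  # imported here so the helpers above work without boto3

    s3 = boto3.client("s3")
    s3.put_public_access_block(
        Bucket=bucket_name,
        PublicAccessBlockConfiguration=public_access_block_config(),
    )
    s3.put_bucket_versioning(
        Bucket=bucket_name,
        VersioningConfiguration=versioning_config(),
    )
```

Calling prepare_mwaa_bucket with the name of your own bucket applies both settings; the two helper functions only build the request payloads and make no network calls.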

Steps to Create an Amazon MWAA Environment

- Sign in to your AWS account

- Search for MWAA

- Click Managed Apache Airflow

- Click Create environment

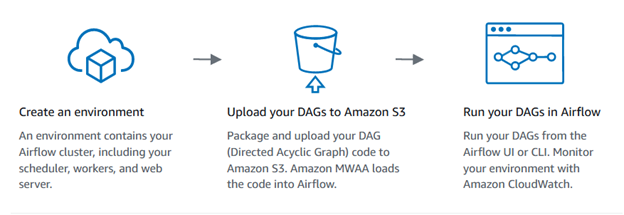

- How Amazon MWAA Works

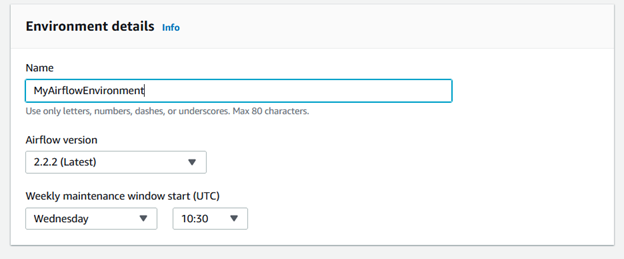

- Give the environment a name that suits your requirements

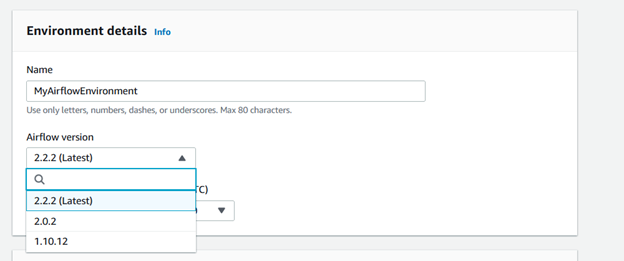

- Select the Airflow version according to your needs

- Choose the Airflow version with caution, because every version has its own requirements and dependencies

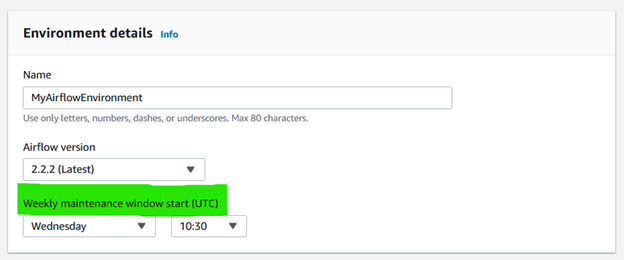

- Weekly maintenance window

- Schedule the weekly maintenance window on the day when testers and developers have the fewest tasks to run, because some ETL jobs may need to be changed and updated to the latest requirements during that window

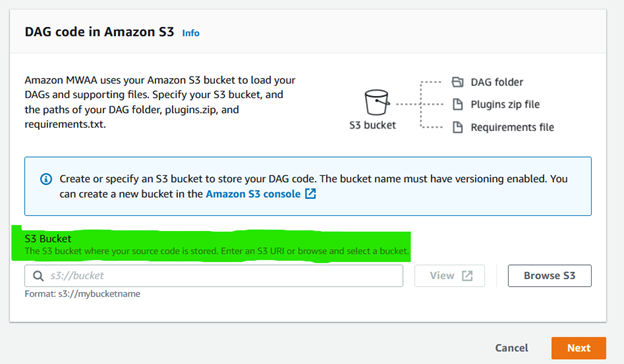

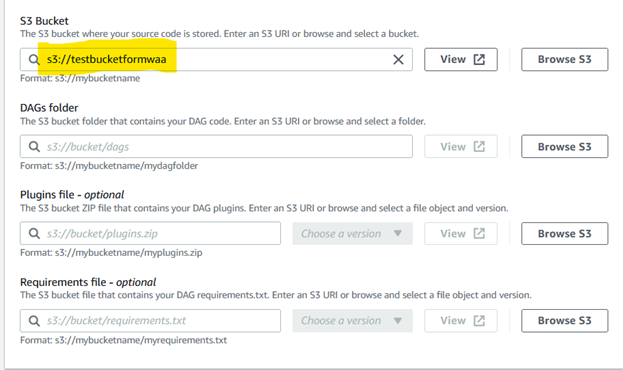

How Folders and Files Should Be Arranged in the S3 Bucket for Amazon MWAA

- Click Browse S3 to select the bucket for MWAA

- Select the bucket you created for MWAA

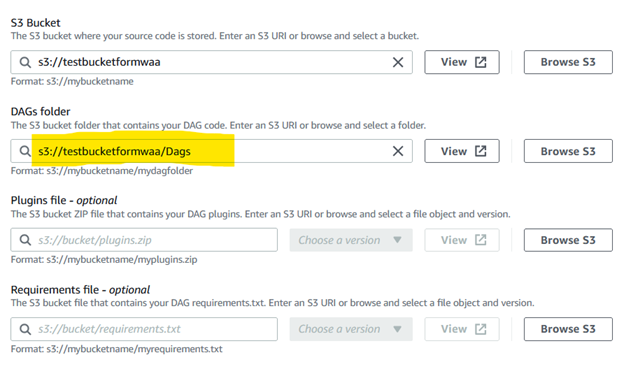

Select the folder for DAGs inside the bucket

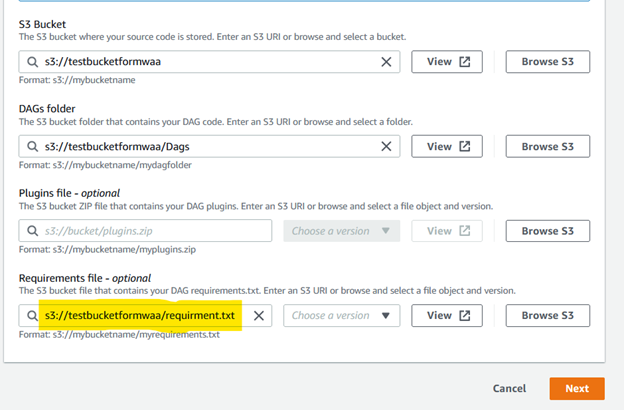

Provide the path of the requirements.txt file, which lists the required packages and their versions

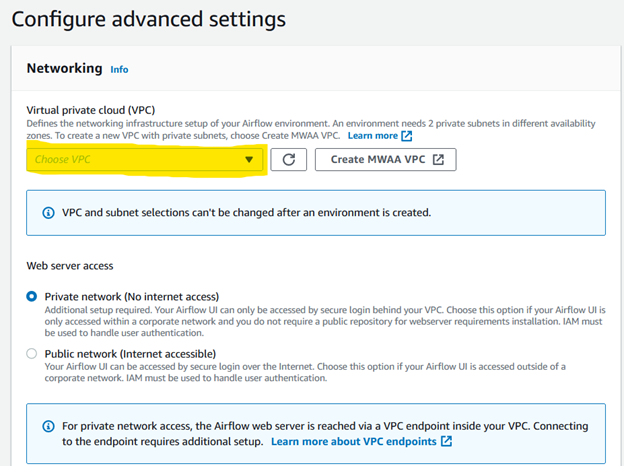
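The bucket, DAGs folder, and requirements.txt path selected above correspond to three settings that are also accepted when an environment is created through the API. Here is a minimal sketch of how they fit together; the helper name and the default folder names are assumptions for illustration, while the keys follow the boto3 create_environment parameters.

```python
def mwaa_source_settings(bucket_name, dags_folder="dags",
                         requirements_key="requirements.txt"):
    """Build the S3-related settings for an MWAA environment.

    Paths are relative to the bucket root, so surrounding slashes are removed.
    """
    return {
        "SourceBucketArn": f"arn:aws:s3:::{bucket_name}",
        "DagS3Path": dags_folder.strip("/"),
        "RequirementsS3Path": requirements_key.lstrip("/"),
    }


# Hypothetical bucket name, used only to show the shape of the result.
settings = mwaa_source_settings("my-mwaa-bucket", "/dags/")
print(settings)
```

Keeping the DAGs in their own folder and requirements.txt at a fixed key makes it easy to point the console (or the API) at the same locations every time.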

- Select the VPC. You can also create a new VPC

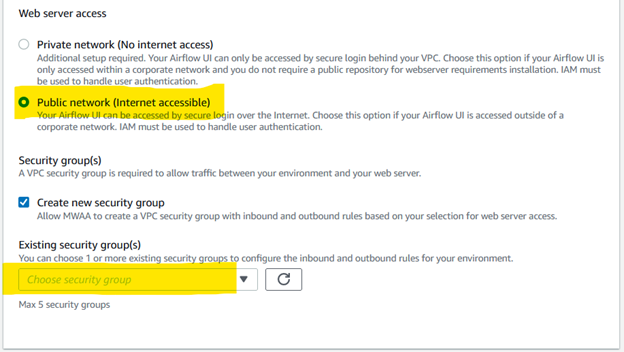

- Choose private or public web server access. If you choose public, provide the security group information

- Select a security group that satisfies the needs of MWAA

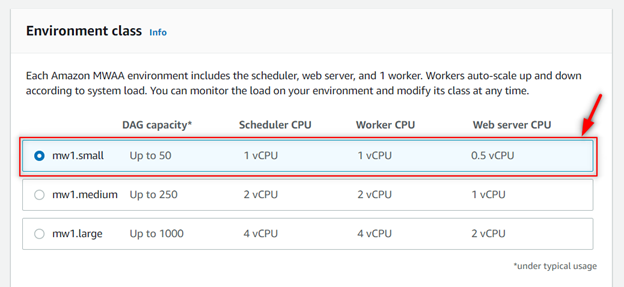

Environment class: select mw1.small for up to 50 DAGs

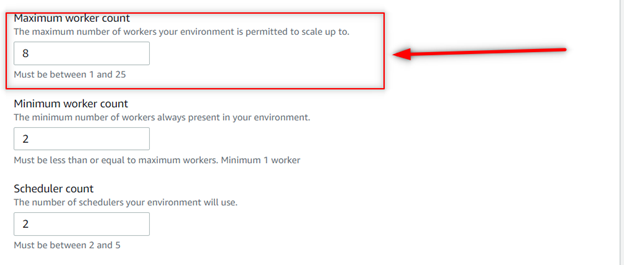

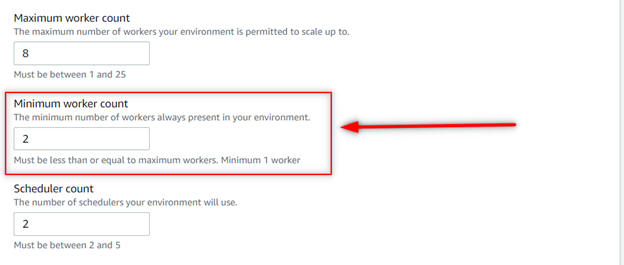

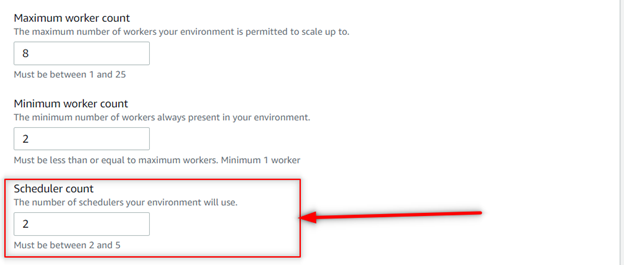

The maximum worker count depends on your needs. The autoscaling mechanism automatically increases the number of Apache Airflow workers in response to running and queued tasks on your Amazon Managed Workflows for Apache Airflow (MWAA) environment, and disposes of extra workers when there are no more tasks queued or executing.

Mechanism Used by Amazon MWAA for Scaling Workers

Amazon MWAA uses Running Tasks and Queued Tasks metrics, where (tasks running + tasks queued) / (tasks per worker) = (required workers).
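The formula above can be sketched in a few lines of Python. This is an illustration only, not MWAA's actual implementation: rounding up to a whole worker and clamping the result between a minimum and maximum worker count are assumptions, and the default limits are hypothetical values.

```python
import math


def required_workers(running, queued, tasks_per_worker,
                     min_workers=1, max_workers=10):
    """Estimate workers as (running + queued) / tasks_per_worker, rounded up.

    The result is kept within [min_workers, max_workers].
    """
    if tasks_per_worker <= 0:
        raise ValueError("tasks_per_worker must be positive")
    needed = math.ceil((running + queued) / tasks_per_worker)
    return max(min_workers, min(needed, max_workers))


# 8 running + 8 queued tasks with 5 tasks per worker: 16 / 5 = 3.2, rounded up.
print(required_workers(8, 8, 5))  # 4
```

With no tasks running or queued, the estimate falls back to the minimum worker count, which matches the description of extra workers being disposed of when nothing is left to execute.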

The scheduler count should be greater than 1, so that if one scheduler goes down another can take its place.

The scheduler is the core component that lines up tasks to run at their specified times, and it plays a critical role at the application level.

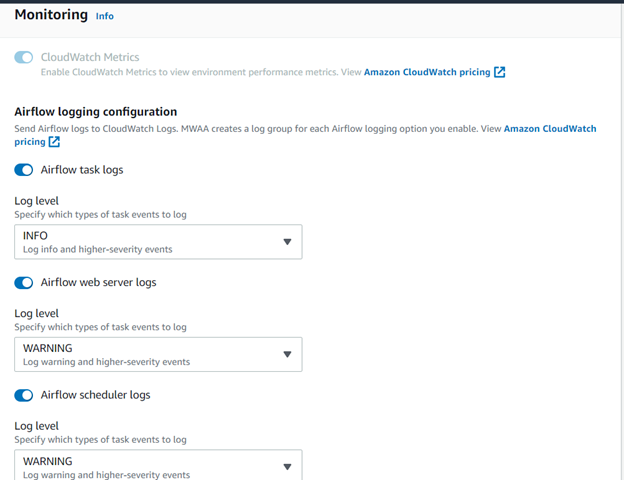

Amazon MWAA can send Apache Airflow logs to Amazon CloudWatch.

- Apache Airflow logs must be enabled on the Amazon Managed Workflows for Apache Airflow (MWAA) console before you can view the Apache Airflow DAG processing, task, web server, and worker logs in CloudWatch

- In this setup, only the Airflow worker logs are set to the ERROR level, which records only errors raised during execution by the workers. The other logs are set to WARNING

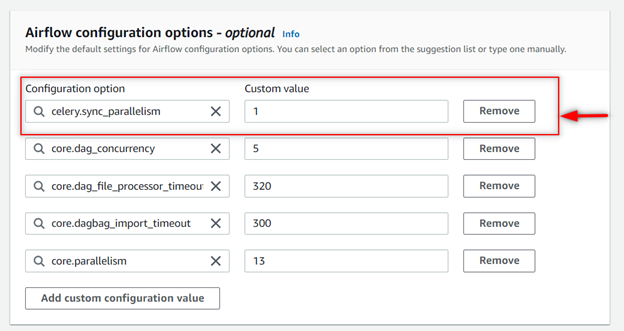
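The log levels described above can also be written down as a logging configuration of the kind the boto3 MWAA client accepts. The sketch below only builds the dictionary; the helper name and its defaults are assumptions that mirror the choices in this walkthrough (worker logs at ERROR, everything else at WARNING).

```python
ALLOWED_LEVELS = {"CRITICAL", "ERROR", "WARNING", "INFO", "DEBUG"}
LOG_MODULES = (
    "DagProcessingLogs",
    "SchedulerLogs",
    "TaskLogs",
    "WebserverLogs",
    "WorkerLogs",
)


def logging_configuration(worker_level="ERROR", default_level="WARNING"):
    """Enable every log type, with a separate level for the worker logs."""
    for level in (worker_level, default_level):
        if level not in ALLOWED_LEVELS:
            raise ValueError(f"unsupported log level: {level}")
    config = {
        module: {"Enabled": True, "LogLevel": default_level}
        for module in LOG_MODULES
    }
    config["WorkerLogs"]["LogLevel"] = worker_level
    return config


print(logging_configuration()["WorkerLogs"])
```

Lower levels such as INFO produce more CloudWatch log volume, so a stricter level on the busiest component is one reasonable way to keep log output manageable.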

- Airflow configuration options

Airflow Configuration: celery.sync_parallelism = 1

You can use this option to prevent queue conflicts by limiting the processes the Celery Executor uses. By default, the value is set to 1 to prevent errors in delivering task logs to CloudWatch Logs. Setting the value to 0 means using the maximum number of processes, but might cause errors when delivering task logs.

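Airflow Configuration: core.dag_concurrency = 5

core = the section of the Airflow configuration that holds general settings, such as the folder where the Airflow pipelines (DAGs) live

dag_concurrency = the number of task instances allowed to run concurrently by the scheduler within a single DAG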

Airflow Configuration: core.dag_file_processor_timeout = 320

How long, in seconds, before timing out a DagFileProcessor, which processes a DAG file

Airflow Configuration: core.dagbag_import_timeout = 300

How long, in seconds, before timing out a Python file import

Airflow Configuration: core.parallelism = 13

This defines the maximum number of task instances that can run concurrently in Airflow regardless of scheduler count and worker count. Generally, this value is reflective of the number of task instances with the running state in the metadata database.
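The configuration options listed above can be collected into a single dictionary of string values, which is the shape the Airflow configuration options take when passed through the API. The following sketch adds a small sanity check; the check_options helper and its rules (keys in section.option form, integer values, per-DAG concurrency not above overall parallelism) are assumptions for illustration, not validation performed by Amazon MWAA.

```python
AIRFLOW_CONFIGURATION_OPTIONS = {
    "celery.sync_parallelism": "1",
    "core.dag_concurrency": "5",
    "core.dag_file_processor_timeout": "320",
    "core.dagbag_import_timeout": "300",
    "core.parallelism": "13",
}


def check_options(options):
    """Return a list of problems found; an empty list means none were found."""
    problems = []
    for key, value in options.items():
        section, dot, name = key.partition(".")
        if not (section and dot and name):
            problems.append(f"{key!r} is not in 'section.option' form")
        if not str(value).isdigit():
            problems.append(f"{key!r} should be a non-negative integer")
    if not problems:
        per_dag = int(options.get("core.dag_concurrency", 0))
        overall = int(options.get("core.parallelism", per_dag))
        if per_dag > overall:
            problems.append("core.dag_concurrency exceeds core.parallelism")
    return problems


print(check_options(AIRFLOW_CONFIGURATION_OPTIONS))  # []
```

A per-DAG limit larger than the overall limit is not an error in Airflow itself, but it can never take effect, so flagging it is a cheap way to catch a mistyped value.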

- Provide the execution role through which MWAA can access all the resources it needs, such as S3, Glue, and other services

- Click Next, review the full configuration, and click Finish at the bottom of the page

How requirements.txt Works in Amazon MWAA

- On Amazon MWAA, you install all Python dependencies by uploading a requirements.txt file to your Amazon S3 bucket, then specifying the version of the file on the Amazon MWAA console each time you update the file.

- Amazon MWAA runs pip3 install -r requirements.txt to install the Python dependencies on the Apache Airflow scheduler and each of the workers.
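Because the file is installed with pip on every scheduler and worker, it helps to catch loosely specified packages before uploading. The sketch below is one possible pre-upload check, not part of Amazon MWAA: it flags any requirement that is not pinned with ==. The regular expression is a simplification of pip's requirement syntax, and the package names in the sample are hypothetical.

```python
import re

# Matches "name==version" with an optional "[extras]" part after the name.
PINNED = re.compile(r"^[A-Za-z0-9][A-Za-z0-9._-]*(\[[^\]]+\])?==[^=\s]+$")


def unpinned_requirements(text):
    """Return the requirement lines that do not pin an exact version."""
    unpinned = []
    for raw in text.splitlines():
        line = raw.split("#", 1)[0].strip()  # drop comments and whitespace
        if not line or line.startswith("-"):  # skip blanks and pip options
            continue
        if not PINNED.match(line):
            unpinned.append(line)
    return unpinned


sample = """\
# hypothetical requirements.txt
example-package==1.2.3
another-package
third-package>=2.0  # loose lower bound
"""
print(unpinned_requirements(sample))  # ['another-package', 'third-package>=2.0']
```

Pinned versions make the install repeatable, which matters because the same file is installed again whenever a worker starts.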

Helpful repository from AWS: https://github.com/aws/aws-mwaa-local-runner

Python dependencies location and size limits

Size limit. We recommend a requirements.txt file that references libraries whose combined size is less than 1 GB. The more libraries Amazon MWAA needs to install, the longer the startup time on an environment. Although Amazon MWAA doesn’t limit the size of installed libraries explicitly, if dependencies can’t be installed within ten minutes, the Fargate service will time out and attempt to roll back the environment to a stable state.

Specifying the requirements.txt version on the Amazon MWAA console

You need to specify the version of your requirements.txt file on the Amazon MWAA console each time you upload a new version of your requirements.txt in your Amazon S3 bucket.

- Open the Environments page on the Amazon MWAA console.

- Choose an environment.

- Choose Edit.

- On the DAG code in Amazon S3 pane, choose a requirements.txt version in the dropdown list.

- Choose Next, Update environment.

You can begin using the new packages immediately after your environment finishes updating.
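The console steps above can also be scripted. The following is a minimal sketch, assuming boto3 is installed and AWS credentials are configured; the helper names are illustrative, and the environment name and S3 object version are values you supply.

```python
def update_kwargs(environment_name, requirements_version):
    """Build the arguments for pointing an environment at a new file version."""
    if not environment_name or not requirements_version:
        raise ValueError("environment name and object version are both required")
    return {
        "Name": environment_name,
        "RequirementsS3ObjectVersion": requirements_version,
    }


def apply_requirements_version(environment_name, requirements_version):
    """Ask Amazon MWAA to update the environment (needs boto3 and credentials)."""
    import boto3  # imported here so update_kwargs works without boto3

    client = boto3.client("mwaa")
    return client.update_environment(
        **update_kwargs(environment_name, requirements_version)
    )
```

The object version is the version ID that S3 assigns to requirements.txt when Bucket Versioning is enabled, which is why versioning is listed among the prerequisites.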

We can test the DAGs and requirements.txt locally with the Amazon MWAA local runner.

Follow this link: https://github.com/aws/aws-mwaa-local-runner

Prerequisites:

- Linux/Ubuntu: Install Docker Compose and Install Docker Engine.

- Windows: Windows Subsystem for Linux (WSL) to run the bash-based command mwaa-local-env. Please follow Windows Subsystem for Linux Installation (WSL) and Using Docker in WSL 2, to get started.

Steps for Setup:

- Run the following commands

git clone https://github.com/aws/aws-mwaa-local-runner.git

cd aws-mwaa-local-runner

- Build a Docker Image

./mwaa-local-env build-image

- Edit the File

sudo vim mwaa-local-env

- Change this line:

AIRFLOW_VERSION=2.0.2

- To this:

AIRFLOW_VERSION=2_0_2

- Start the environment

./mwaa-local-env start

- Test the requirements.txt

./mwaa-local-env test-requirements

Result

pawanrai852@LAPTOP-JKJKOORI:~/aws-mwaa-local-runner$ ./mwaa-local-env test-requirements

Container amazon/mwaa-local:2_0_2 not built. Building locally.

[+] Building 10.7s (20/20) FINISHED
