| Report Notebook |

Uses and Working

AWS Services

AWS BOTO3

AWS BOTO3 (BRIEF SUMMARY)

- The SDK is composed of two key Python packages:

- Botocore (the library providing the low-level functionality shared between the Python SDK and the AWS CLI)

- Boto3 (the package implementing the Python SDK itself).

AWS BOTO3 USES

- We can do many things with the help of boto3

- We can easily access and use large data sets stored in AWS S3 with boto3

- The one basic requirement for the user is a set of key credentials (an access key ID and a secret access key), which are used for verification and security

- With boto3 we can create, delete, and edit any resource for which we have the right permissions

- It is a safe and useful way to import data into Python

How to Use?

- Test that the code works in a Jupyter notebook

How to import

- import boto3

- If boto3 is not installed

- Use: pip install boto3
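Before running the examples in a notebook, it can help to confirm that the package is importable in the current kernel. The following is a minimal sketch using only the standard library; the helper name `is_installed` is an illustrative assumption and is not part of boto3.

```python
import importlib.util


def is_installed(package_name):
    """Return True if the named top-level package can be imported."""
    return importlib.util.find_spec(package_name) is not None


if is_installed("boto3"):
    print("boto3 is available")
else:
    print("boto3 is missing; run: pip install boto3")
```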

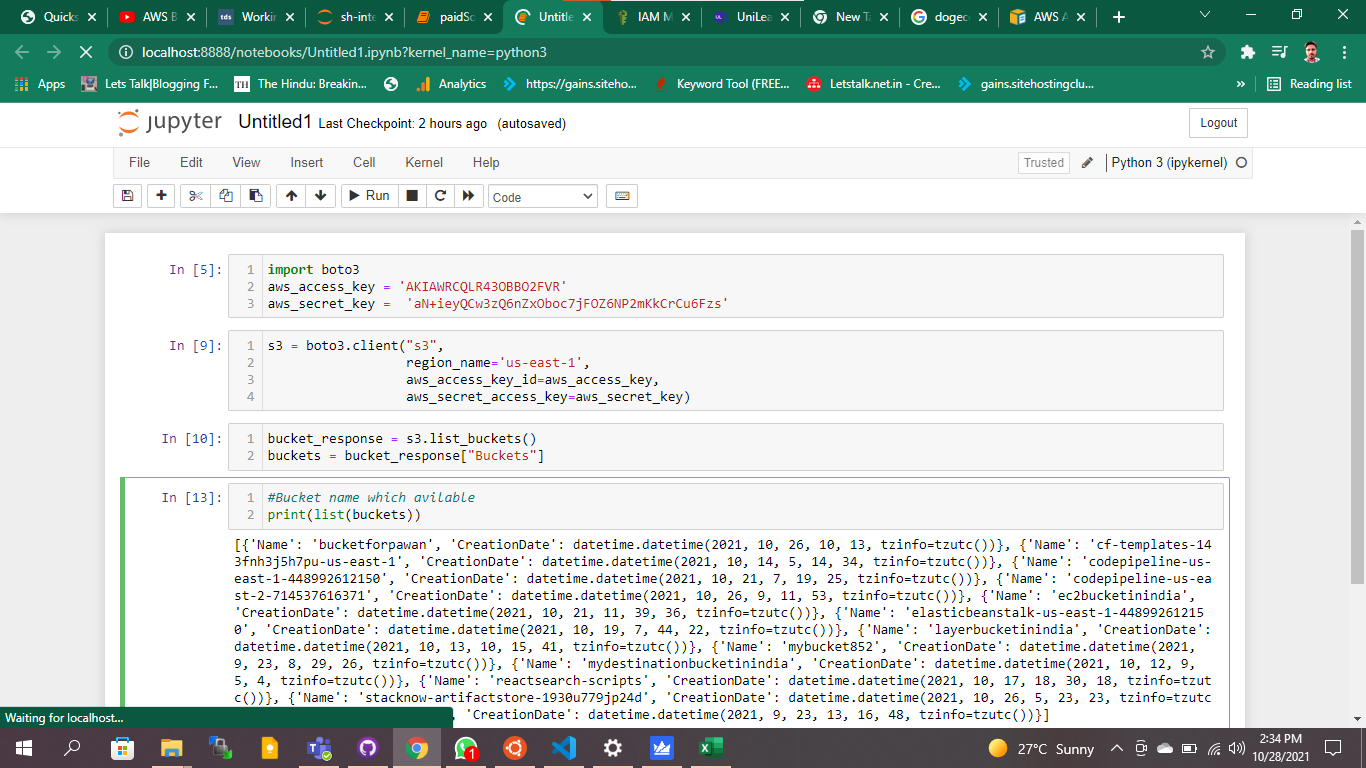

- How to list all the buckets available in AWS S3

Code from the picture above:

    import boto3

    aws_access_key = 'xxxxxxxxxxxxxxxxxxxxx'
    aws_secret_key = 'aN+ieyQCw3zQ6nZxOboc7jxxxxxxxxxxx'

    # Create a low-level S3 client with explicit credentials
    s3 = boto3.client("s3",
                      region_name='us-east-1',
                      aws_access_key_id=aws_access_key,
                      aws_secret_access_key=aws_secret_key)

    # list_buckets() returns a dict; the bucket entries are under "Buckets"
    bucket_response = s3.list_buckets()
    buckets = bucket_response["Buckets"]
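Each entry under "Buckets" is a dictionary, so we usually want only the bucket names. The sketch below is one possible way to extract them. It also shows an alternative to hardcoding keys in the notebook: when no keys are passed, boto3 looks for credentials in its default locations, such as the `AWS_ACCESS_KEY_ID` and `AWS_SECRET_ACCESS_KEY` environment variables or the shared `~/.aws/credentials` file. The helper names `list_bucket_names` and `make_s3_client` are assumptions made for illustration.

```python
def list_bucket_names(s3_client):
    """Return the names of all buckets visible to the given S3 client."""
    response = s3_client.list_buckets()
    return [bucket["Name"] for bucket in response.get("Buckets", [])]


def make_s3_client(region_name="us-east-1"):
    """Create an S3 client that relies on boto3's default credential lookup."""
    # Imported here so list_bucket_names() can be used and tested
    # even where boto3 is not installed.
    import boto3

    return boto3.client("s3", region_name=region_name)
```

In a notebook, assuming credentials are already configured, this could be used as `print(list_bucket_names(make_s3_client()))`.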

Bucket creation code:

    bucket = s3.create_bucket(Bucket='boto3bucket852')
    bucket_response = s3.list_buckets()
    buckets = bucket_response["Buckets"]
    # Buckets that are available
    print(list(buckets))
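The call above works as written because the client targets us-east-1. For any other region, `create_bucket` also expects a `CreateBucketConfiguration` with a matching `LocationConstraint`. The following is a hedged sketch of how that difference could be handled; the helper names and the `eu-west-1` region in the usage note are illustrative assumptions.

```python
def create_bucket_kwargs(bucket_name, region_name="us-east-1"):
    """Build the keyword arguments for create_bucket in the given region."""
    kwargs = {"Bucket": bucket_name}
    # us-east-1 is the default location, so no constraint is passed for it.
    if region_name != "us-east-1":
        kwargs["CreateBucketConfiguration"] = {"LocationConstraint": region_name}
    return kwargs


def create_bucket(s3_client, bucket_name, region_name="us-east-1"):
    """Create a bucket with a client that targets region_name."""
    return s3_client.create_bucket(**create_bucket_kwargs(bucket_name, region_name))
```

For example, `create_bucket(s3, "boto3bucket852")` reproduces the call above, while `create_bucket(s3, "boto3bucket852", "eu-west-1")` would add the location constraint (the client itself should then be created with the same region).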

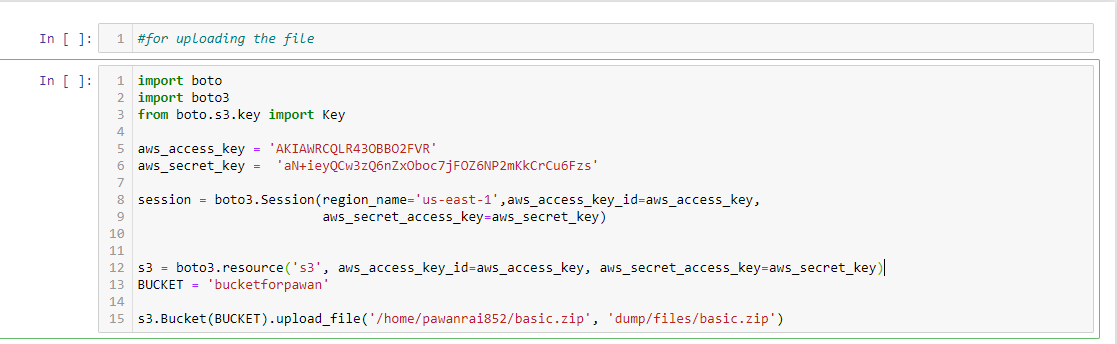

- How to create a Python file to upload a local file to AWS S3

- Import boto3 (the legacy boto package is not needed)

- Create a session

- /home/pawanrai852/basic.zip is the local path

- 'dump/files/basic.zip' is the object key, that is, the path inside the bucket

Code for uploading a local file to S3, adapted from the image above:

    import boto3

    aws_access_key = 'AKIAWRCQLR4XXXXXXXXXXXX'
    aws_secret_key = 'aN+ieyQCw3zQ6nZxObxxxxxxxxxxxxxxxxxx'

    session = boto3.Session(region_name='us-east-1',
                            aws_access_key_id=aws_access_key,
                            aws_secret_access_key=aws_secret_key)

    # Build the S3 resource from the session so its region and credentials are used
    s3 = session.resource('s3')

    BUCKET = 'bucketforpawan'

    # upload_file(local path, object key inside the bucket)
    s3.Bucket(BUCKET).upload_file('/home/pawanrai852/basic.zip', 'dump/files/basic.zip')
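The object key in the upload call is simply the bucket folder joined with the local file name. A small sketch of how that key could be derived instead of typed by hand is shown below; `build_object_key` and `upload_to_prefix` are hypothetical helper names, and `bucket` is assumed to be a boto3 `Bucket` resource such as `s3.Bucket(BUCKET)`.

```python
import os
import posixpath


def build_object_key(local_path, key_prefix):
    """Join an S3 key prefix with the file name of a local path."""
    # S3 keys always use forward slashes, hence posixpath.
    return posixpath.join(key_prefix, os.path.basename(local_path))


def upload_to_prefix(bucket, local_path, key_prefix):
    """Upload local_path under key_prefix and return the resulting key."""
    key = build_object_key(local_path, key_prefix)
    bucket.upload_file(local_path, key)  # Bucket.upload_file(Filename, Key)
    return key
```

With the paths from this post, `upload_to_prefix(s3.Bucket(BUCKET), '/home/pawanrai852/basic.zip', 'dump/files')` would upload to the same key as the original call.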

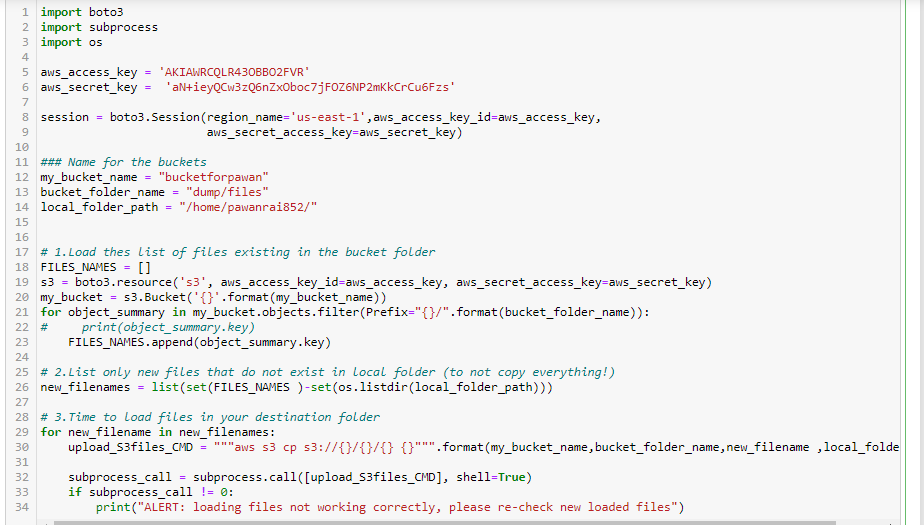

How to Create a Python File to Download S3 Bucket Items?

Code for downloading files from S3, adapted from the image above:

    import boto3
    import subprocess
    import os

    aws_access_key = 'AKIAWRCQLR43OBxxxxxxxxxxxxxx'
    aws_secret_key = 'aN+ieyQCw3zQ6nXXXXXXXXXXXXXXXXXXXXXXXX'

    session = boto3.Session(region_name='us-east-1',
                            aws_access_key_id=aws_access_key,
                            aws_secret_access_key=aws_secret_key)

    # Names for the bucket and folders
    my_bucket_name = "bucketforpawan"
    bucket_folder_name = "dump/files"
    local_folder_path = "/home/pawanrai852/"

    # 1. Load the list of files existing in the bucket folder
    FILES_NAMES = []
    s3 = session.resource('s3')
    my_bucket = s3.Bucket(my_bucket_name)
    for object_summary in my_bucket.objects.filter(Prefix="{}/".format(bucket_folder_name)):
        # Keep only the file name so it can be compared with os.listdir()
        file_name = os.path.basename(object_summary.key)
        if file_name:  # skip the folder placeholder key itself
            FILES_NAMES.append(file_name)

    # 2. List only new files that do not exist in the local folder (to not copy everything!)
    new_filenames = list(set(FILES_NAMES) - set(os.listdir(local_folder_path)))

    # 3. Time to load files into your destination folder
    # Note: the AWS CLI must be installed and configured with its own credentials
    for new_filename in new_filenames:
        download_S3files_CMD = "aws s3 cp s3://{}/{}/{} {}".format(
            my_bucket_name, bucket_folder_name, new_filename, local_folder_path)
        subprocess_call = subprocess.call(download_S3files_CMD, shell=True)
        if subprocess_call != 0:
            print("ALERT: loading files not working correctly, please re-check new loaded files")
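The same three steps (list the bucket folder, keep only files missing locally, copy them) can also be done with boto3 alone, without calling the AWS CLI through `subprocess`. The following is a minimal sketch of that alternative, not the code from the image; `download_new_files` is a hypothetical helper name, and `bucket` is assumed to be a boto3 `Bucket` resource.

```python
import os


def download_new_files(bucket, key_prefix, local_dir):
    """Download objects under key_prefix that are not yet in local_dir.

    Returns the list of file names that were downloaded.
    """
    existing = set(os.listdir(local_dir))
    downloaded = []
    for obj in bucket.objects.filter(Prefix=key_prefix.rstrip("/") + "/"):
        name = os.path.basename(obj.key)
        # Skip folder placeholder keys (they end in "/") and files already present.
        if not name or name in existing:
            continue
        bucket.download_file(obj.key, os.path.join(local_dir, name))
        downloaded.append(name)
    return downloaded
```

With the names used in this post, a call could look like `download_new_files(s3.Bucket("bucketforpawan"), "dump/files", "/home/pawanrai852/")`. Note that this sketch flattens nested keys into a single local folder, so objects with the same file name in different subfolders would collide.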

Conclusion: Boto3 is very useful when a program needs to import large data sets from S3 for processing, and the processed data can then be saved back to an S3 bucket with boto3 as well.
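The read, process, and save cycle described here can be sketched in a few lines. This is only an illustration under stated assumptions: `process_object` and its `transform` argument are hypothetical names, and `s3_client` is assumed to be a client created with `boto3.client("s3", ...)`. The sketch reads the whole object into memory, so for very large files a streaming or file-based approach (for example `download_file`) is likely a better fit.

```python
def process_object(s3_client, bucket, source_key, target_key, transform):
    """Read an object, apply transform to its bytes, and store the result.

    Returns the number of bytes written.
    """
    body = s3_client.get_object(Bucket=bucket, Key=source_key)["Body"].read()
    result = transform(body)
    s3_client.put_object(Bucket=bucket, Key=target_key, Body=result)
    return len(result)
```

For example, `process_object(s3, "bucketforpawan", "dump/files/in.txt", "dump/files/out.txt", bytes.upper)` would store an upper-cased copy of a text object; the two keys here are made up for the example.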
